A total of 532 acoustic samples of Hainan gibbons were screened manually. They comprised recordings made with a portable recorder while tracking and observing the gibbons and recordings made with an automated recorder. During screening, recordings were first graded into three quality levels: high, medium, and low. This yielded 44 high-quality recordings from seven individuals. The seven callers were GAM1, GBM1, GBSA, GCM1, GCM2, GDM1, and GEM1, where the letter after "G" denotes the family group and the character after "M/S" denotes the individual number of the adult male (M) or subadult male (S). Only 40.9% of the recordings were made manually. The raw files of all automated recordings were provided by Professor Wang Jichao's team, and the corresponding data are archived at the Hainan National Park Institute.

Mel-frequency cepstral coefficients (MFCCs) are a feature-extraction method that uses the cepstrum to capture the spectral envelope after high-frequency information has been de-emphasized in line with human hearing [1]. They are widely used in both human acoustics and bioacoustics. In this study, MFCCs and their first- and second-order differences (Δ, Δ²) are used for automated feature extraction.
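As an illustration of how the Δ and Δ² features relate to the static MFCCs, the following is a minimal sketch, not the study's implementation (which is in R). It assumes the MFCCs are already available as one list of coefficients per frame, and it uses the common regression-based delta formula with a window half-width of 2 and edge frames repeated at the boundaries. The function names `delta` and `add_deltas`, the `width` parameter, and the padding choice are all assumptions made for this example.

```python
def delta(frames, width=2):
    """Regression-based delta of per-frame features.

    frames: list of frames, each a list of coefficients.
    width:  half-width of the regression window (assumed value, not from the study).
    """
    if width < 1:
        raise ValueError("width must be >= 1")
    if not frames:
        return []
    n_frames = len(frames)
    n_coeffs = len(frames[0])
    denom = 2 * sum(n * n for n in range(1, width + 1))
    out = []
    for t in range(n_frames):
        row = []
        for k in range(n_coeffs):
            acc = 0.0
            for n in range(1, width + 1):
                # Clamp indices so the first/last frame is repeated at the edges.
                ahead = frames[min(t + n, n_frames - 1)][k]
                behind = frames[max(t - n, 0)][k]
                acc += n * (ahead - behind)
            row.append(acc / denom)
        out.append(row)
    return out


def add_deltas(mfcc, width=2):
    """Concatenate static, delta, and delta-delta coefficients frame by frame."""
    d1 = delta(mfcc, width)
    d2 = delta(d1, width)
    return [c + a + b for c, a, b in zip(mfcc, d1, d2)]
```

With 13 static coefficients per frame, for example, `add_deltas` would return 39 values per frame.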

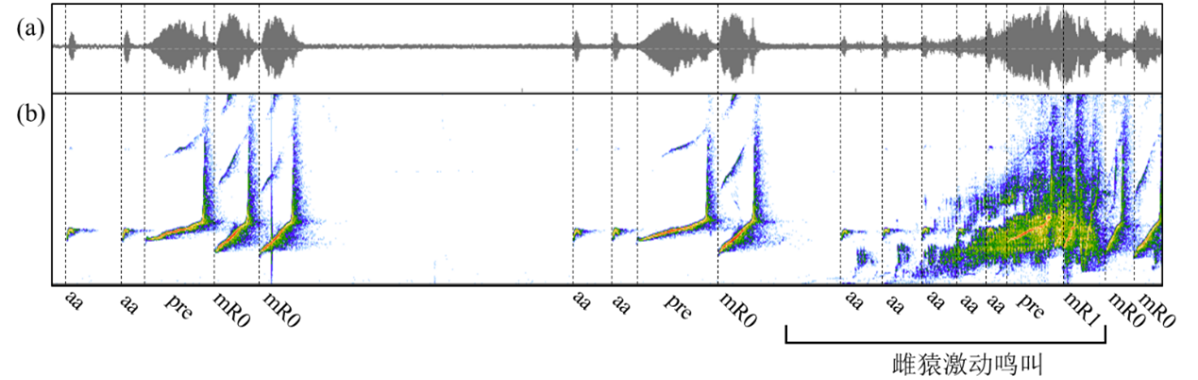

Five characteristic notes of the male Hainan gibbon were identified (Fig. 1): the boom note, the aa note, the pre-modulated note, the modulated-R0 note, and the modulated-R1 note.

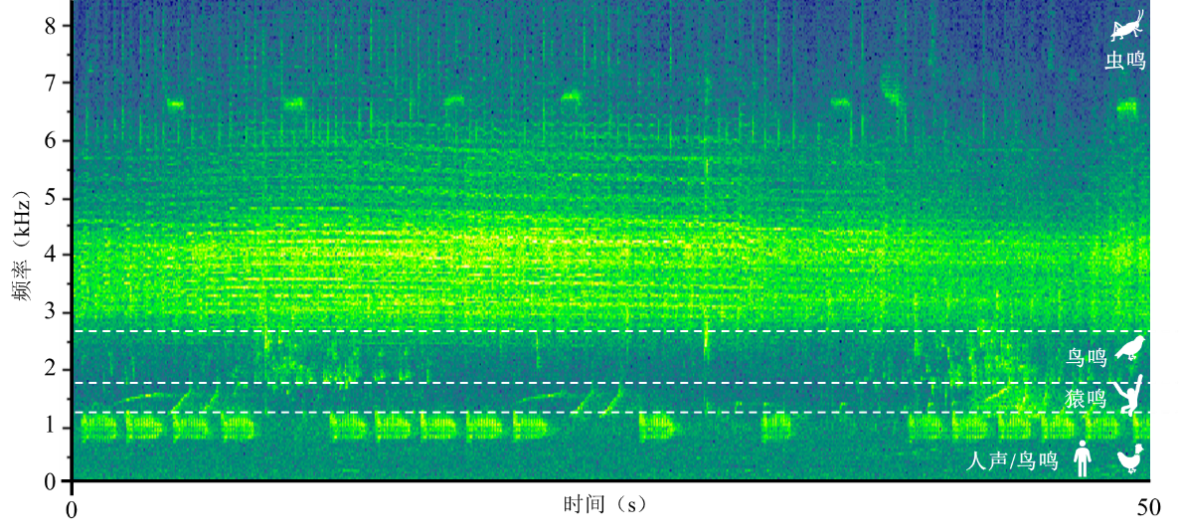

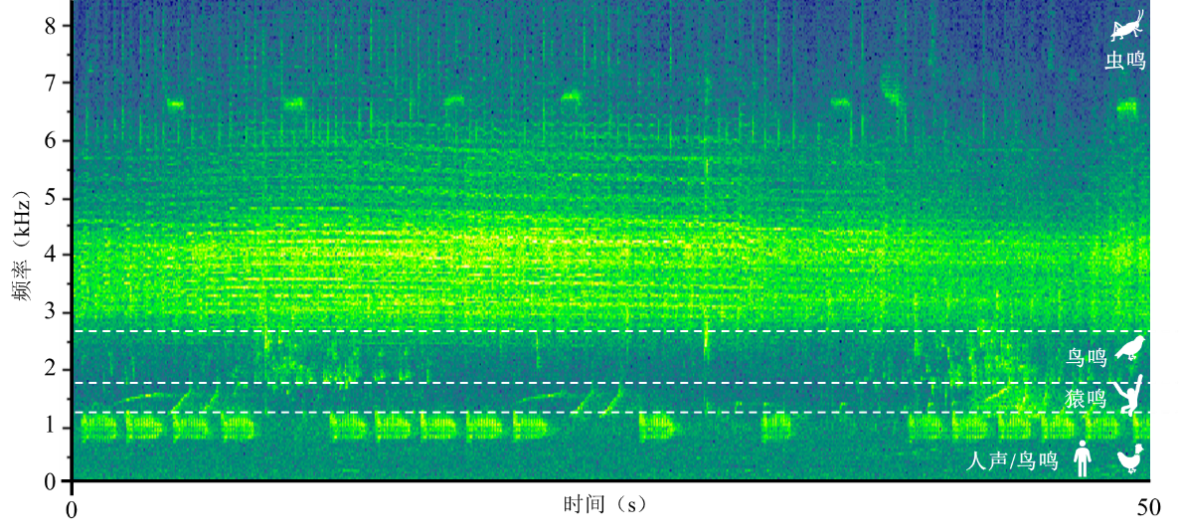

According to the acoustic niche hypothesis, the calls of different species are partitioned in the time and frequency domains (see Fig. 2). Extracting features within a specific frequency band can therefore substantially reduce the influence of noise, and the narrower the band, the more noise is likely to be excluded. In addition, when every minimum recognition unit (MRU) has the same structure, recognition becomes considerably easier.
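One way to picture band-limited feature extraction is to restrict both the FFT bins and the mel filterbank to the band of interest. The sketch below is a hedged illustration only: it uses the widely used 2595·log10(1 + f/700) mel formula, and the band limits, sample rate, and FFT size in the usage note are placeholders, not values from this study. The helper names `mel_band_edges` and `bins_in_band` are hypothetical.

```python
import math


def hz_to_mel(f_hz):
    """Convert Hz to mel (common 2595*log10 formula)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(f_min, f_max, n_filters):
    """Return n_filters + 2 edge frequencies (Hz), evenly spaced on the mel
    scale between f_min and f_max, for a band-limited triangular filterbank."""
    if not 0 <= f_min < f_max:
        raise ValueError("need 0 <= f_min < f_max")
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_filters + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_filters + 2)]


def bins_in_band(f_min, f_max, sample_rate, n_fft):
    """Indices of FFT bins whose centre frequency lies inside [f_min, f_max]."""
    bin_hz = sample_rate / n_fft
    return [k for k in range(n_fft // 2 + 1) if f_min <= k * bin_hz <= f_max]
```

For instance, `bins_in_band(500.0, 2000.0, 16000, 512)` keeps only the bins between the two placeholder limits, so energy outside that band never reaches the filterbank.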

In view of the above, in this phase of the research we tried using (1) the pre note alone and (2) pre + n×mR0 as the MRU, and compared the classification results to determine the most suitable feature extraction for subsequent work. Given annotated vocalizations, all of the steps above can be carried out automatically with R code.
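The two MRU definitions can be sketched as a segmentation step over an annotated note sequence. The study's own pipeline is in R; the Python below is only an illustrative sketch. It assumes annotations are available as time-ordered `(label, start_s, end_s)` tuples with the labels `"pre"` and `"mR0"`, and it interprets "pre + n×mR0" as one pre note immediately followed by n consecutive mR0 notes. The tuple layout, the label strings, the function names, and that interpretation are all assumptions.

```python
def pre_only_units(notes):
    """MRU option (1): the time span of every 'pre' note."""
    return [(start, end) for label, start, end in notes if label == "pre"]


def pre_plus_mr0_units(notes, n):
    """MRU option (2): each 'pre' note plus the n 'mR0' notes that directly
    follow it. A 'pre' note with fewer than n following 'mR0' notes is skipped,
    so every returned unit has the same structure."""
    if n < 1:
        raise ValueError("n must be >= 1")
    units = []
    for i, (label, start, _end) in enumerate(notes):
        if label != "pre":
            continue
        following = notes[i + 1 : i + 1 + n]
        if len(following) == n and all(lab == "mR0" for lab, _, _ in following):
            # Unit runs from the start of 'pre' to the end of the n-th 'mR0'.
            units.append((start, following[-1][2]))
    return units
```

Each returned `(start, end)` pair can then be used to cut the recording before feature extraction, which keeps the structure of every MRU identical within a given option.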